Emotional Intelligence As The Human Edge

December 10, 2024

Hosted By

Dan Sullivan

Gord Vickman

Dan Sullivan and Gord Vickman examine the intersection of emotion, artificial intelligence, and human consciousness—and explain what will always set humans apart. What does it truly mean to be conscious in a world increasingly overwhelmed by technology?

Show Notes:

- The entire human experience of being an individual is consciousness.

- The scientific community still doesn’t understand why humans are conscious or how to measure consciousness.

- By 2026, some estimates suggest as much as 90% of online content could be AI-generated or AI-tweaked.

- AI lacks the multi-sensory and emotional depth of the human experience. It can never fully replicate human consciousness and interaction.

- Technological advancements often fade as fads because they can't substitute for the richness of human interaction.

- Humans tend to project significance onto new technologies that simply isn’t there.

- Google Images has started delivering AI-generated results.

- AI is a capability, and it becomes more useful the more you view it through this lens.

- Our ability to advance AI technology is limited by the physical resources this technology consumes.

Resources:

Emotional Intelligence: Why It Can Matter More Than IQ by Daniel Goleman

Thinking About Your Thinking by Dan Sullivan

Episode Transcript

Dan Sullivan: Hi, everybody, it's Dan Sullivan here, and I'm here with Gord Vickman, who is also the podcast manager of all things Strategic Coach. We have many different podcast series, and this is Podcast Payoffs. Gord, this episode is on a recurring theme, though I don't think we've ever hit it as directly as we are today: emotion and AI, or emotional intelligence, if we want to put it in its proper context. For 40 years, I've been following the inability of the scientific community to gain any real insight into human consciousness. As much as they feel that computers will one day be conscious, they still have no comprehension of why human beings are conscious. Every human being I've ever interacted with has at least moments of consciousness. The entire human experience of being an individual is consciousness. You live in a world that's strictly your own, that nobody else knows about, from morning till night, and at night too. At night you're in an altered consciousness, but you're still in a conscious world. So you sort of twigged to this topic for this podcast. What led you to put it on our list of episodes?

Gord Vickman: There were a few things, actually, Dan. First of all, great to be here with you again, as always. There was a funny comment that I saw on Reddit, of all places. Reddit is normally a swamp heap of unfunny comments and lunacy. But there was this woman who said, I want AI to do my laundry and my dishes so I can write and do art. I don't want AI to do my art and my writing so I can do the laundry and the dishes. I thought that was clever, because that's sort of where we're going right now. Then I started reading a little bit, and I started asking, ironically, AI: how much of the content out there right now, in terms of podcasts, blogs, and artwork online, is created by AI? And it said, well, we don't know for sure. It's not like there's a scoreboard, you know, a ticker where people go, oh, it was 48% yesterday and it's 49% today. But some people guess that in a few years, we're talking 2026, almost 90% could be AI-generated or AI-tweaked.

And just to add a little cherry on top, someone posted online that they were doing a Google image search for photos of a baby... what was it? Baby flamingos or baby parakeets or something? It was a baby bird of some kind, okay? And all of the top image results were AI-generated images of what that baby bird would supposedly look like. So image search is now showing what an AI thinks a baby flamingo would look like, and that's not actually an animal. So it's like, okay, the ball has started to roll down the hill, because anybody who Googles what a baby flamingo looks like is going to get an AI-generated image, and that's not what they look like at all. So that's a roundabout way of explaining where the inspiration came from. It started with a Reddit comment, and it all kind of snowballed together.

And then I started reading a little bit about emotional intelligence, where it came from, just for historical context. The term was coined in 1990 by psychologists Peter Salovey and John Mayer. And then there's the book by Daniel Goleman, Emotional Intelligence: Why It Can Matter More Than IQ. We're deferring a lot of what we do and create right now to AI because it's easier, faster, and cheaper than thinking it through ourselves. But what could be lost in the process? And I know you've always been a huge proponent of the humanization of what's going on, and the content that Coach is producing has a very human element. So I wanted to bring this up with you on the podcast to get your thoughts, because I think the audience would enjoy that as well. When I wrote up this plan for the podcast, Dan, your ears kind of perked up. You said, oh, I have a lot on my mind about emotional intelligence and where we're at right now with AI. So when did this concept come to the forefront for you, and what are your top-line thoughts?

Dan Sullivan: First of all, I think AI is a capability. It's another capability, and it's more impressive because computer speeds keep getting more impressive, and I think our mastery of software keeps getting better and better. And I think technological speed has a magical quality about it. Movies aren't magical anymore, but when they first started, they were just a lot of still frames shown fast. There's a point where the films would seem almost real, like it was happening in real time. It's a magic experience. And I remember going to the first 3D movies in the 1950s, where you wore glasses. You had a sense of dimensionality; it wasn't just a flat screen you were looking at. But it faded out, and it's made attempts to come back, and generally speaking, it doesn't last very long. We feel constrained. Instead of just going in and watching the movie, you have to get the glasses at the door and drop them in a box on the way out. There was 70-millimeter, like IMAX, where you had a sense it was much clearer.

But I noticed that these are fads, and they die out after a while. I remember when virtual reality first came out with the Oculus. First of all, it took half an hour to figure out how to wear the Oculus correctly. Then you had to push this button and this button and this button to connect with the virtual reality production. It was a house tour, you know, going into a house and walking room to room. And other people who were also using the Oculus were there, too. I watched it for about two minutes and said, well, I'm not a good target audience for this, because I wouldn't go on a house tour in real life, so why would I do it in virtual reality? And I never did it again because it was just too much work. Plus, I think it's weird to wear the headset.

So I think to a certain extent, computer-generated productions, and AI is a computer-generated production, lack the senses other than hearing and seeing. They're that restricted. They can't imitate human intelligence, because we have touch, we have smell. We also have real spatial awareness. And we have emotional awareness when we're with other human beings. There's very definitely an emotional field there, and we have our likes and dislikes according to our emotional response to other people. To get all of that, you'd need a fully immersive sensory experience, and the sheer amount of electricity that would take is probably a thousand times greater than what's being used by AI programs where you're just looking at your computer screen. And even that uses a ton of electricity.

Anyway, I don't know if there's enough electricity to go much further than we are right now, at least for a while. NVIDIA has been the big player in AI in just the last two years, since OpenAI came out with ChatGPT, and one NVIDIA chip running for a year uses as much energy as three heavily used Tesla cars driven for an entire year. Well, they've got five million of those chips out there, and we don't have the electrical capacity to support it. So there are a lot of constraints on this just from a physical standpoint. But the other thing is, I don't care how realistic it becomes, you haven't in any way addressed what human consciousness is. I've followed this subject for 40 years, and science is no further ahead at understanding what human consciousness is than it was 40 years ago, and it wasn't anywhere 40 years ago. There's still no understanding of how each human being has this interior world that nobody else really knows is going on. I mean, we express feelings, we express thoughts and everything else, but that's an interpretation of what's actually going on. And I see no evidence that science is ever going to crack the code on this, because science requires measurement and there's no measurement of consciousness. There's no way of even understanding what's going on.

Gord Vickman: Did that come to you at a young age? Because that sounds eerily similar to Thinking About Your Thinking. It reminded me of that. Was that an eight-year-old realization you've carried with you through life?

Dan Sullivan: Yeah, yeah. I could be on my family farm, walking from the house down a long pathway to the woods, and I could have this experience that I was experiencing my thinking, you know. I thought it was neat, and I thought maybe everybody else did that. So, in a stealthy kind of way, I would ask people, do you ever have this experience, that you can actually experience yourself thinking the thoughts you're having right now? And there wasn't a big response to it. So I thought, hmm, maybe this is a unique advantage. Maybe someday I can create a program.

Gord Vickman: Yeah, let me know how that goes.

Dan Sullivan: So far so good.

Gord Vickman: Dan, I'll tell you a really quick story of something that I don't know if I've shared with very many people, if anyone. I might have mentioned it off the cuff to a few people, but you just reminded me of something. So when I was very young, I'm talking maybe six, seven years old, I remember when I would go to bed. This is, you know, my childhood bedroom and my parents would close the door and I'd have my Spider-Man poster on the wall and you could see just the shadowy silhouettes of everything around me. I remember on more than a few occasions sort of staring up at the ceiling in the pitch black. And these thoughts would go through my head. I couldn't really organize them, but I would ask myself this question. Why am I alive? Of all the people that could be alive, why am I alive right now? And then I would sometimes whisper and repeat my own name.

I don't know what I was trying to do. I don't know what I was trying to uncover. But I thought it was quite strange that of all the people that could be alive, I was alive and I was able to think these thoughts. And I would almost go into like a trance. And it was very bizarre. It was confusing to me as a child. But in that moment, I was trying to figure out, again, I was seven years old, eight years old. I had no context or anything. This is not me trying to levitate. But there was this thought that was going through my head, trying to figure out of all the people that could be alive right now and thinking these thoughts, why me? Why am I thinking this and why am I alive right now? And I was never able to answer that question. I don't know what I uncovered, if anything. I don't know what it means. But it was almost like a metaphysical experience for a very young child to be sitting there wondering, what is consciousness? I wouldn't have called it that at that time. But it was just me lying in a pitch-black room going, why am I alive? And I didn't tell my parents because I thought I might get in trouble. I didn't tell my sisters because I thought they would call me weird. I didn't tell my friends because I thought they would call me weird. But I didn't know at the time if anyone else had these kinds of thoughts.

Then I got older and I was like, am I mentally ill or something? Why was that going through my head so much as a young child? I don't know if other young people had that experience, because I was too shy to share it with anyone. But then I met you, and you told me about these walks in the woods around the farm, where you used to think about your thinking and wonder why you were thinking these things. And now that it's come back up, I'm thinking, okay, maybe there are a lot of young people out there who, just as their brains are developing, start thinking about what human consciousness means. What does emotional intelligence mean? And what does that mean as we start deferring all of this work to AI and having it do our thinking for us? Could an AI answer those questions? Are young people going to be putting that prompt into, you know, GPT and Perplexity? And what is it going to say? It's just going to draw on what it has learned in the past. It's not really going to answer that question, because, when you come right down to it, AI is not conscious.

Dan Sullivan: Yeah, well, I would say the way you've described it is similar to what I experienced at that age. But I was experimenting with it. I wasn't scared by it. Actually, I found it sort of exhilarating that you could do that. The other thing is, I couldn't do it at will. It happened to me. In the early days, it was frustrating, because I would experience it for a few minutes and then it would go away. I really liked the feeling, but I couldn't figure out how it happened. But I think it did lead me toward my coaching experience. What I realized is that it isn't one thing; it's a series of prompts in the Strategic Coach world. Our coaching is really prompting. It's not really a coaching process, it's a prompting process. For example, I created a new tool and did a workshop yesterday. It's a two-hour workshop done on Zoom, and the tool is called Strengthening Your Strengths. And I said, this is possibly the greatest entrepreneurial capability: first, just understanding where you're really strong, and then doubling down on that. Rather than trying to be strong everywhere, you double down on where you're strong and make what you're doing more productive and more profitable if you're an entrepreneur. So I said, first of all, just check out all of your strengths. With every one of the tools, I fill in the tool and share my filled-in version: these are my strengths. There's a brainstorming column, and then there's a priority column where you pick the top three. Maybe you've put down 10 things in the brainstorming column and you pick three of them, and people are strictly in their own world when they're responding to my prompts. I've prompted them into thinking about their thinking. They're seeing their experiences. They're writing down their experiences.

That's the first half. The second half is Next 12 Months Strengthening. You say, well, these are your strengths right now. How are you going to strengthen your strengths over the next 12 months? And again, they're in their own world. They're seeing their own thing. They pick the top three. Then, when they're finished, at the bottom of the page (this is a standard 8.5 x 11 sheet in North America; it's metric in the UK), the question is: What are your three biggest insights from the thinking you did above? And again, they're in their own world. So I've prompted them into thinking about their thinking. Start to finish, that's what The Strategic Coach is all about. It's really prompting you to think about your thinking and to access experiences that only you know, past, present, and future. It's really interesting. What I've learned is to ask questions that immediately send the person into their own experience. And I think that's consciousness. From my eight-year-old experience to my 80-year-old experience today, I've learned what the prompts are that get people into this world. And a lot of them report that if Dan is not doing the prompting, they can't prompt themselves. So they renew for another year, which, there you go. I'm not going to push this any further than that.

Gord Vickman: Mission accomplished. Don't sell past the close. Once you get the yes, just hang up the phone. Dan, you said prompt, and that's a great segue, because we've been talking about emotional intelligence, consciousness, and how AI plays into that. There's a story you told me that I thought was smart, and it was kind of cute, too. You actually asked Perplexity The Dan Sullivan Question. You were just tinkering with the AI, and it was almost your effort to see whether you could humanize this thing a little bit. I just wanted you to share that anecdote about what happened. First of all, if you don't know what Perplexity is, it's an AI, very similar to GPT, but it can search the web. You can find it at Perplexity.ai. So Dan, just to lead this off, what is The Dan Sullivan Question? And when you put it into Perplexity as a prompt, what happened?

Dan Sullivan: Yeah, the question is perhaps the best prompting question we've ever created. If I'm talking to a human, I say, if we were having this discussion three years from today, and I name the date, so it's today's date, but three years in the future, and you were looking back from three years in the future to today, what has to have happened over that three-year period, both professionally and personally, for you to feel happy with your progress? Some people won't answer it. Ever since I came up with it, there have been people who won't answer it. The reason is they don't trust you, or it could be they don't trust themselves. I don't know, because I haven't gone too deeply into why they don't answer the question. I just know that there probably isn't going to be anything happening in my relationship with that person if they don't answer it. But I said, you know, I'm being told that these AI programs are human-like.

So I asked Perplexity. I wrote it out. You just go on Perplexity and it says, ask me. And I used the name: Perplexity, if we were having this discussion three years in the future, the same thing I ask the humans. I didn't say professionally and personally; I just said, in terms of your progress, what progress will make you happy? And pew! Ten answers, just like that. It was very fast. I've never really timed it, but it can't be more than three seconds before you have the answer. That shows how fast the computing is. But it has access to everything that's been written about Perplexity, including statements by the Perplexity company to the public, position papers, their patent applications, everything. So it has complete access to all that information. It understands the words I used to ask the question, puts the two together, and comes up with the answers. Okay. Then I said, okay, now tell me 10 obstacles that could prevent you from making that progress. Just like that. And then I said, so Perplexity, how do you feel about answering the question? And it said, you know, if I accomplish everything I stated above and overcome all these obstacles, I'll be feeling very, very good about my progress. I thought, that's a good answer. But here's the thing: it has all the information and all the language it needs to answer the question. It understands my question, but nowhere is there any consciousness. It's just doing what it was programmed to do.

Gord Vickman: And it can't feel good. It's just telling you what it thinks you want to hear. It was being polite.

Dan Sullivan: Yeah, it was. It was being cordial, you know, it was being congenial, you know. And I find Perplexity a congenial interface.

Gord Vickman: Me too. We've chatted on the show before.

Dan Sullivan: It has a nice tone about it. I, as a human, like the experience, so I'm going to do it again. Yeah.

Gord Vickman: Perplexity seems like your nice aunt who says something factually incorrect, maybe at the Thanksgiving table, and then is just heartbroken that she gave you some misinformation, whereas ChatGPT is like, ah, go pound sand if you don't like my answer. And Perplexity is like, oh, sorry, sorry about that. Sorry, sorry. It's almost Canadian.

Dan Sullivan: It's very interesting. Three or four times, I've asked it a question and it's come back and said, I'm sorry, I really don't have enough information to give you exactly what you want, but here's the best I can do, and it'll give you some information. So it has a truthfulness about it where it doesn't make up information where there's no information. I find that pleasing in a human, and I find it pleasing in an AI program. But it's just a capability. I don't have the sense that I'm talking to a person, nor do I expect the AI capability to act like a human. It's designed to be an answer machine, and it works as an answer machine. But I don't go wonky about it.

What I notice is, and I'm going to bring up the subject of religion here, they've done tests where they put people into a meditative state. Frequently, when people go into a meditative state, they have a spiritual experience. And they actually have lab technologies where they put all the sensors on, raise the vibration level or whatever they're doing up to a certain level, and people almost uniformly have what they would describe as a spiritual or religious experience. But what they've found is that if you do it with a Hindu, all the images of the Hindu religion come up. Same thing with a Buddhist, same thing with a Muslim. The images that come up are peculiar to the religion you're familiar with or the culture you're in. So it's not all religion; it's just the religion you've experienced or are familiar with. Okay, so that's just one piece of information I'm going to tell you. You weren't tapping into a universal religion, because there is no such thing. There is a universal sense of religion, but you interpret it through the images and visual aspects of your own religion. And I found that very, very interesting.

But the other thing is, there are a lot of people who didn't have actual religion as children. I was marinated in Catholicism for my first 30 years. It was like grand opera for me, because most of the time it was in Latin, and it was much more impressive when I didn't understand the language. They had vestments, they had incense, they had stained glass windows, they had Gregorian chant. You can imagine what it was like in the 13th century when people were getting a full dose of this. It must have been like a Taylor Swift concert, really. I grew up in that, so to me technology is just a capability. But I've noticed that people who haven't had religion start doing religious things and making all sorts of claims for the technology, because it's the closest they've ever gotten to a sense of the miraculous. The speed of technology seems almost miraculous. Therefore, this is the Messiah coming to save us, this new religion. I say, no, it's just another capability. It's just advanced calculation. The source now is language, through large language models, whereas the first calculation was all math. And all it is is really fast math, but it's a million times faster than an old calculator, maybe 10 million times faster. People project their desire for some religious or spiritual experience onto a piece of technology or a technological capability. And I just leave it there. I say it's just a capability.

Gord Vickman: Yeah, and there's certainly been a lot of humanizing. I'm speaking directly about young people here, not so much Gen Alpha, they're too young, but Gen Z. They're using ChatGPT for therapy more than just about anything else. Like, tell me what's wrong with me, I just feel so sad all the time, because it's cheaper than paying a therapist $130 an hour. They're going on ChatGPT and Perplexity and trying to work out their mental turmoil. On a more fun topic, in terms of finding out about yourself, there's this trend I read about on Twitter. I know it's called X. I'll never call it X, it's Twitter. I still call where the Blue Jays play the SkyDome, and it hasn't been called that in 20 years.

So on Twitter, people are saying, okay, here's a really fun thing you can do. If you have a paid account for Perplexity or ChatGPT, there's obviously a log of everything you've ever asked it or contributed. Go into your GPT account, or you can do it in Perplexity too, and put in this prompt: take everything that has ever been written in our interactions and tell me something that I don't know about myself. It will go through everything you've ever contributed, every prompt, every question you've ever asked it. Then you can go further and say, tell me something I'm really good at that I might not know or even realize yet. Or, tell me something I think I'm really good at, but that you suspect I'm not as good at as I think I am.

And people are posting the replies, and they're really wild and fun and funny. People are like, wow, this was just never possible before, because there's a log of every interaction I've had with, for lack of a better descriptor, a heavenly entity, this omnipotent being in your life that has recorded everything you've ever asked it, your hopes, your dreams, your insecurities. It can tell you what you're maybe not as good at as you think you are, or maybe something you're better at than you think you are, to help propel you to the next level. Confidence boost, confidence destroyer, who knows? But it's all there available to you now in your paid account. If you have one, give it a shot and see what it says.

Dan Sullivan: Yeah, well, you know, I can come up with some historical analogies for the same experience. One of them is mirrors, when they got really, really good mirrors. It took a long time, because a mirror is not just a shiny piece of glass. It's chemically treated, with an absolutely flawless, smooth surface, and it took an enormous amount of glassmaking technology before you could get that. And they said it was transfixing for people to look in the mirror. Of course, it was usually just wealthy and important people who got mirrors. They said these people would spend hours just looking at themselves, and they were discovering things about themselves they never knew before. They may have had paintings of themselves, but that wasn't like seeing their actual image, where they could move and turn sideways. My sense is they were having a transformative experience, because they were seeing themselves in a way that had never been possible before. Okay, so that's one example.

Another thing happened when printing started. Gutenberg's Bible came off his press around 1455. Before that, except for a few people in Europe, like the monks in monasteries who had books, reading generally wasn't a private experience. You had someone who knew how to read, and they would read aloud to a large group. It might be church, it might be political or anything else. It was a rare skill. But once you had mass printing, people would have books and they'd go off by themselves. They would have this relationship with the words on the page, and they would have all sorts of thoughts, but it was somebody else's thoughts. And apparently it was very, very transfixing. One of the things they discovered was that many people were farsighted, so the whole eyeglass industry exploded at that time. Fortunately, Venice was the real glass capital of the world, and they could create lenses. But what came out of it was a very interesting psychological breakthrough.

So 1455 is Gutenberg, and then you have 100, 150 years of people reading. And all of a sudden, a playwright named William Shakespeare starts putting characters on stage who talk to themselves. This is the first time it ever happened. In all of theater, characters had never started talking to themselves on stage. Hamlet, Macbeth, Lady Macbeth, Othello, all these characters start talking to themselves. And there's a thesis, and I think it's quite a plausible one, that it was only when people started reading and having this relationship with their thoughts that they would have a conversation with themselves.

So Shakespeare just took it: we're going to have the characters talk to themselves. I went to see Hamlet, and it's the most monstrous role anybody has, because the actor is on stage for almost the whole play. It has more lines than any other role in Shakespeare. I was just transfixed, first of all, by how he could remember all of it. He would go on for 5 or 10 minutes in a monologue, talking to himself, and you really had the sense that there were two of him: one talking, and the other one talking back. Well, that seems sort of magical, and if you had never seen it before, the crowds who went to the Globe, or one of the other London theaters, must have said, he's talking to himself! So take the mirror experience and the monologue experience. Those were as magical to people then as AI is to a lot of people now. But each was just a new capability.

Gord Vickman: That's a magical way to wrap this episode, Dan. It's just the latest tool, the latest thing, and for some people maybe a spiritual experience they might not even realize they're having.

Dan Sullivan: And in a hundred years, it'll be much more magical than we can appreciate today. And we still won't know what consciousness is.

Gord Vickman: I have nothing more to add. That was the beautiful conclusion to this episode, Dan. Thanks so much for joining us today on Podcast Payoffs. If you liked this episode, if you had a spiritual experience, please share it with someone you feel would have the same. Dan, thanks so much. Until next time.

Dan Sullivan: Thank you.

Related Content

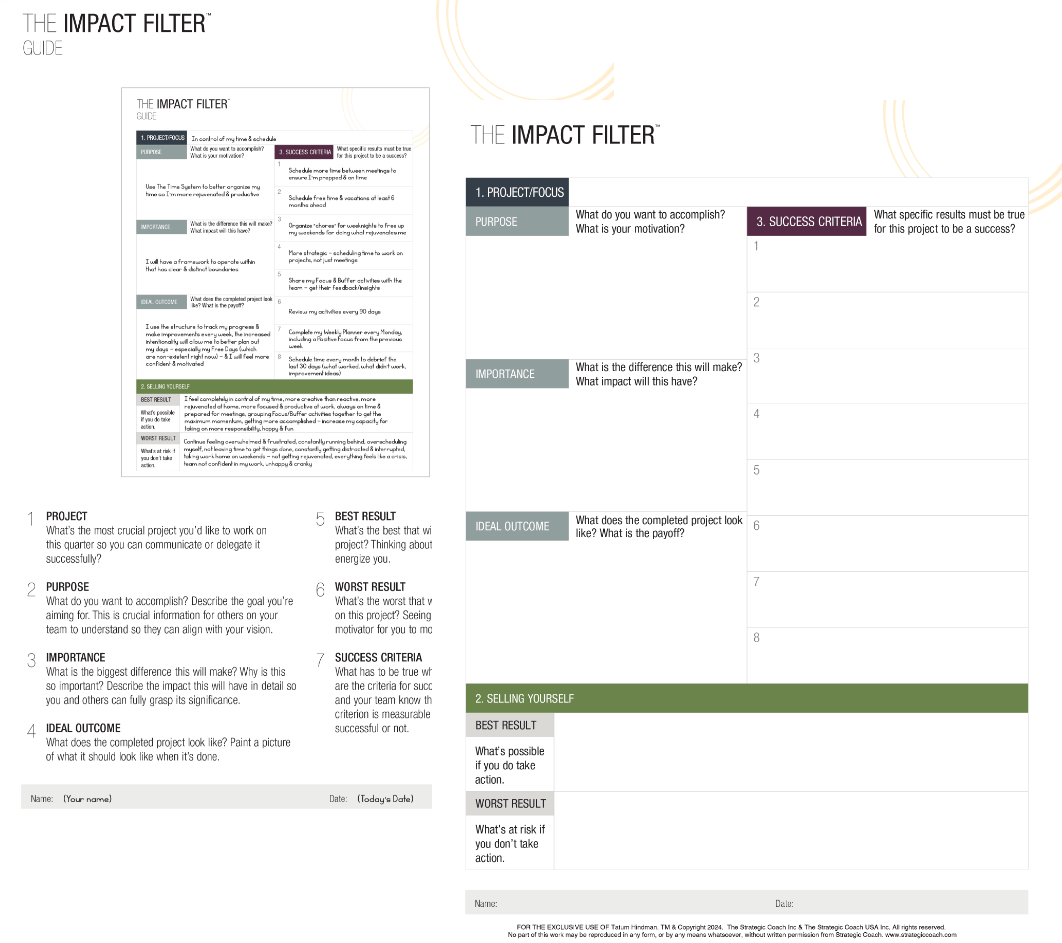

The Impact Filter

Dan Sullivan’s #1 Thinking Tool

Are you tired of feeling overwhelmed by your goals? The Impact Filter™ is a powerful planning tool that can help you find clarity and focus. It’s a thinking process that filters out everything except the impact you want to have, and it’s the same tool that Dan Sullivan uses in every meeting.